1. Introduction

Large language models (LLMs) are very large deep learning models that aim to predict and generate sensible text in a natural or symbolic human language. LLMs, in other words, are trained to model human language and even symbolic language such as code. We say that LLMs are trained for the task of language modeling.

Models that can generate some form of content, such as images, videos, or music, are called generative models. Since LLMs generate text, they are also generative models.

In this blog post, we look at the difference between LLMs and earlier language models (LMs), we briefly review the architecture and training strategies for LLMs, and explain why they have been astoundingly successful at a wide variety of language tasks. We defer a detailed discussion of more fundamental technical topics such as language modeling, embeddings, and Transformer models to later blog posts.

2. Background

2.1 Language Modeling

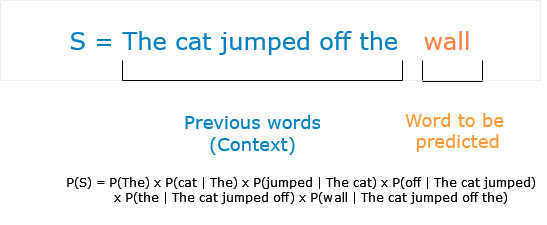

The essence of tasks such as text translation, question-answering, and building a chatbot is learning how to model human language. An LM is a probabilistic model that assigns a probability \(P(w_1, w_2, \dots, w_n)\) to every finite sequence of words \(w_1, \dots, w_n\) (grammatical or not). By the chain rule of probability, this joint probability can be written as a product of conditional probabilities:

\[ P(w_1, w_2, \dots, w_n) = P(w_1) \times P(w_2|w_1) \times P(w_3|w_1, w_2) \dots \times P(w_n|w_1, w_2, \dots, w_{n-1}).\]

Hence, for the sentence “I am going to the market”, we have

\[P(\text{I am going to the market}) = P(\text{I}) \times P(\text{am|I}) \times P(\text{going|I am}) \times P(\text{to|I am going}) \] \[\times P(\text{the|I am going to}) \times P(\text{market|I am going to the}).\]
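The factorization above can be checked numerically. The following is a minimal sketch, assuming a tiny hypothetical corpus and conditional probabilities estimated simply by counting sentence prefixes; the corpus and the names `conditional_prob` and `sentence_prob` are illustrative and do not come from any library.

```python
from collections import Counter

# Hypothetical toy corpus; each sentence is a list of words.
CORPUS = [
    "I am going to the market".split(),
    "I am going to the park".split(),
    "I am happy".split(),
    "you are going to the market".split(),
]

# Count every sentence prefix, including the empty prefix ().
PREFIX_COUNTS = Counter(
    tuple(sentence[:i]) for sentence in CORPUS for i in range(len(sentence) + 1)
)


def conditional_prob(word, history):
    """Estimate P(word | history) as count(history + word) / count(history)."""
    history = tuple(history)
    if PREFIX_COUNTS[history] == 0:
        return 0.0
    return PREFIX_COUNTS[history + (word,)] / PREFIX_COUNTS[history]


def sentence_prob(words):
    """Multiply the conditional probabilities, following the chain rule."""
    prob = 1.0
    for i, word in enumerate(words):
        prob *= conditional_prob(word, words[:i])
    return prob


print(sentence_prob("I am going to the market".split()))
```

Counting whole prefixes only works for sequences seen in the corpus; unseen word orders get probability zero, which is one reason practical LMs learn the conditional probabilities with a model instead of a lookup table.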

An LM therefore needs to learn these conditional probabilities for many different words and phrases. With a large text dataset at hand, this aim can be formulated as a machine learning task in two ways:

- prediction of the next word in the text given the previous words in the sentence; for example, given “I am going to the”, predict “market”;

- prediction of masked words or phrases given the rest of the words in the sentence (called masked language modeling); for example, given “I am [MASK] to the market”, predict “going”.

In both cases, the system creates its own prediction challenges from the text corpus. This learning paradigm, in which we don’t provide explicit training labels, is called self-supervised learning. Since it does away with the need for expensive labeling, the use of large unlabeled text datasets scraped from the Web becomes possible. The concept is used not just in natural language processing (NLP) but in computer vision as well.
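To make the idea of self-generated prediction challenges concrete, here is a minimal sketch of how training pairs could be derived from raw text without any manual labels. The function names and the `[MASK]` placeholder are assumptions chosen for illustration, and masking exactly one word at a time is a simplification of how masking is done in practice.

```python
def next_word_examples(words):
    """Build (context, target) pairs for next-word prediction."""
    return [(words[:i], words[i]) for i in range(1, len(words))]


def masked_word_examples(words, mask_token="[MASK]"):
    """Build (masked sentence, target) pairs, masking one word at a time."""
    examples = []
    for i, target in enumerate(words):
        masked = words[:i] + [mask_token] + words[i + 1:]
        examples.append((masked, target))
    return examples


sentence = "I am going to the market".split()

# Each pair is an input and the label the model must predict.
for context, target in next_word_examples(sentence):
    print(" ".join(context), "->", target)
for masked, target in masked_word_examples(sentence):
    print(" ".join(masked), "->", target)
```

Every label here is a word that was already present in the text, which is why no human annotation is needed.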

Prediction of masked or next words is powerful because doing it well calls for many kinds of understanding: every form of linguistic and world knowledge, from grammar, sentence structure, and word meaning to facts, helps one perform this task better. In performing language modeling, a model thus gathers a wide understanding of language and of the world as represented in the training corpus.

2.2 Transformers

Transformers are NLP models that take in text (a sequence of words) and output another text to perform some task such as translation or question-answering.

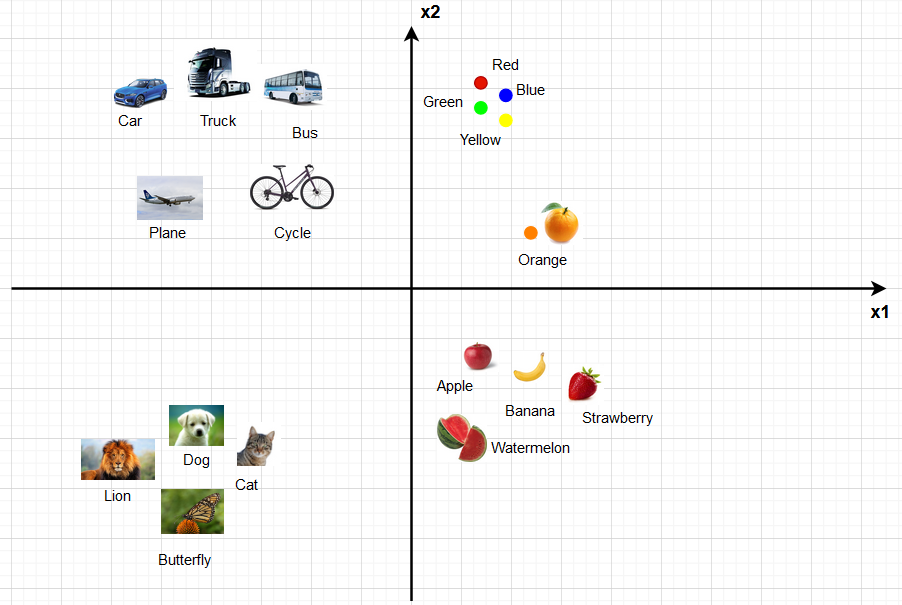

2.2.1. Embeddings

An embedding represents a word as a point in a real-valued vector space whose dimension can be in the hundreds or thousands. The proximity of the embeddings of two different words in this space is an indication of their semantic similarity.
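As a rough illustration of proximity in embedding space, the sketch below compares hand-picked three-dimensional vectors using cosine similarity. Both the vectors and the choice of cosine similarity as the proximity measure are assumptions made for this example; real embeddings are learned and have far more dimensions.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two vectors; 1.0 means same direction."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


# Hypothetical embeddings, hand-picked for illustration only.
EMBEDDINGS = {
    "cat": [0.9, 0.8, 0.1],
    "dog": [0.8, 0.9, 0.2],
    "car": [0.1, 0.2, 0.9],
}

print(cosine_similarity(EMBEDDINGS["cat"], EMBEDDINGS["dog"]))
print(cosine_similarity(EMBEDDINGS["cat"], EMBEDDINGS["car"]))
```

With these made-up vectors, "cat" comes out closer to "dog" than to "car", mirroring the intuition that semantically related words sit near each other.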

2.2.2. Encoder-Decoder Models

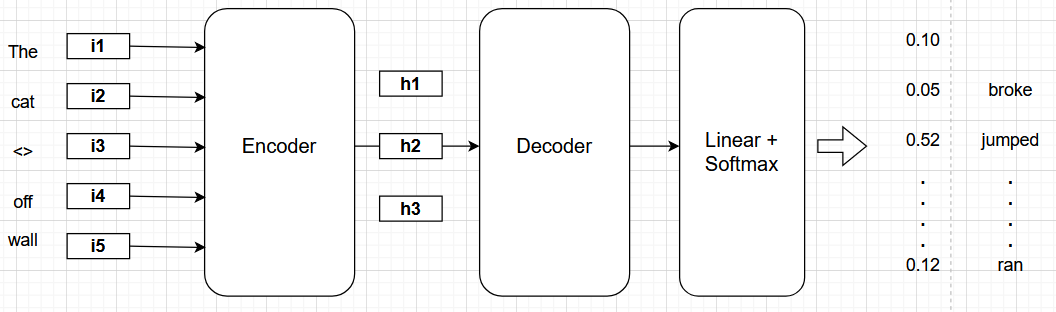

The Transformer, at a high level, consists of two main components - an encoder and a decoder. The encoder takes in the word embeddings and transforms them by a sequence of operations to produce another set of ‘encoded’ embeddings. In each operation of that sequence, we allow the embeddings of all words to ‘interact’ and influence each other. The effect is that the new set of ‘encoded embeddings’ encapsulates higher-level context of the sentence.

The decoder takes in the set of encoded embeddings and produces a sequence of real-valued vectors. Once we pass this sequence through a linear layer and a softmax, we obtain the desired output. The softmax function normalizes the values in a vector into a probability distribution: every output lies between 0 and 1, the outputs sum to 1, and larger input values receive larger probabilities.
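The softmax step can be written in a few lines. This is a minimal sketch in plain Python; subtracting the maximum score before exponentiating is a common numerical-stability trick and is an addition here, not something the architecture description above prescribes.

```python
import math


def softmax(scores):
    """Map a list of real-valued scores to a probability distribution."""
    # Subtracting the maximum does not change the result but avoids overflow.
    shift = max(scores)
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


probs = softmax([2.0, 1.0, 0.1])
print(probs)
print(sum(probs))
```

In a Transformer, the input scores would come from the final linear layer, with one score per word in the vocabulary.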

2.2.3. Self-attention

A key feature of Transformer models is their use of attention during the encoding and decoding phases. Attention layers are special architectural features that are present in both the encoder and the decoder. In the encoder, they enable the model to pay specific attention to certain words in the input sentence when forming a representation of each word. In the decoder, they enable the model to pay attention to the already produced output when generating the next output word.

The interpretation of words and phrases is context dependent: it depends on the other words and phrases in the sentence. Crucially, we often need only a few context words, and not the whole sentence, to determine the meaning or representation of a particular word. For example, the meaning of the word “bat” in “Cricket is played with a bat” can be inferred by looking at “Cricket”, and that in “Bats are nocturnal creatures” by looking at “creatures”.

Attention also lends itself to parallel computation, thereby boosting the speed at which powerful NLP models can be trained on GPUs.
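The mechanism can be sketched with the commonly used scaled dot-product formulation, which this post does not spell out and is assumed here. As a further simplification, the queries, keys, and values are the embeddings themselves, whereas a real Transformer first applies learned linear projections. The `causal` flag is a hypothetical parameter that mimics the decoder, where a word may only attend to words already produced.

```python
import math


def softmax(scores):
    shift = max(scores)
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(embeddings, causal=False):
    """Return (outputs, weights) for a list of equal-length embedding vectors."""
    dim = len(embeddings[0])
    outputs, all_weights = [], []
    for i, query in enumerate(embeddings):
        # With causal=True, position i may only attend to positions 0..i.
        visible = embeddings[: i + 1] if causal else embeddings
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in visible
        ]
        weights = softmax(scores)
        # Each output is a weighted average of the visible vectors.
        output = [
            sum(w * value[d] for w, value in zip(weights, visible))
            for d in range(dim)
        ]
        outputs.append(output)
        all_weights.append(weights)
    return outputs, all_weights


# Three hypothetical 2-dimensional word embeddings.
words = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(words)
for row in weights:
    print([round(w, 3) for w in row])
```

The loop over positions is written sequentially for readability, but each position's computation is independent of the others, which is what makes attention amenable to parallel execution.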

3. Large Language Models

A machine learning model trained on a vast quantity of data (generally using self-supervision) that can be adapted to a wide range of downstream tasks is called a foundation model. Large language models are a specific type of foundation model for NLP tasks, and they make use of the Transformer architecture discussed above. Some examples of LLMs are BERT, GPT-3, and T5.

Though LLMs are based on the already established ideas of deep learning and self-supervised learning, it is the scale of these models and of the datasets on which they are trained that makes possible their astonishing performance on a wide variety of tasks. This scale is facilitated by improvements in computer hardware (GPUs and memory), the development of the Transformer architecture, and the availability of huge datasets. Self-supervised learning is key to exploiting such huge datasets, since no annotation is required.

The significance of foundation models lies in two concepts: emergence and homogenization. Emergence means that foundation models, with their billions of parameters, can be adapted to a wide variety of tasks through a mere textual description of the task (a prompt); that is, they are able to do in-context learning for many tasks for which they were neither trained nor anticipated to be used. Homogenization means that there exists a handful of powerful base foundation models, such as BERT or T5, from which almost all state-of-the-art NLP models are derived through fine-tuning.

3.1 Types of LLMs

The original Transformer architecture consists of two parts - encoder and decoder. Depending on the task at hand, researchers use either of the parts or both, giving rise to three types of LLMs:

Encoder-only LLMs (e.g., BERT) - This variant uses only the encoder part. It is designed to produce dense embeddings for the input word sequence. During pretraining with masked word prediction, one attaches an un-embedding layer, which maps the output embeddings back to words in the vocabulary. For downstream tasks, we remove the un-embedding layer and train a small task-specific model on top of the encoder, making use of its embeddings. Such models are most suitable for tasks like missing word prediction and document classification.

Decoder-only LLMs (e.g., GPT) - This variant uses only the decoder part of the Transformer. It is mainly used for text generation (output) from a given prompt (input). The words of the prompt are embedded and fed directly to the decoder, which then outputs a sequence of words one at a time in an auto-regressive manner. Auto-regression means that, while generating a word, the model can refer to the prompt and to all previously generated words.
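Auto-regressive generation can be sketched as a simple loop that repeatedly asks for a next-word distribution and appends the chosen word. Everything below is hypothetical: `toy_next_word_probs` is a stand-in for a trained decoder backed by a hand-written table, it looks only at the last word even though it receives the whole sequence, and greedy selection is just one of several possible decoding strategies.

```python
# Hypothetical next-word probabilities standing in for a trained decoder.
NEXT_WORD_PROBS = {
    "I": {"am": 1.0},
    "am": {"going": 0.7, "happy": 0.3},
    "going": {"to": 1.0},
    "to": {"the": 1.0},
    "the": {"market": 0.6, "park": 0.4},
    "market": {"<end>": 1.0},
    "park": {"<end>": 1.0},
    "happy": {"<end>": 1.0},
}


def toy_next_word_probs(sequence):
    """Return P(next word | sequence); this toy version uses only the last word."""
    return NEXT_WORD_PROBS[sequence[-1]]


def generate(prompt, max_new_words=10, end_token="<end>"):
    """Greedily extend the prompt one word at a time."""
    sequence = list(prompt)
    for _ in range(max_new_words):
        probs = toy_next_word_probs(sequence)
        next_word = max(probs, key=probs.get)  # pick the most probable word
        if next_word == end_token:
            break
        # The new word becomes part of the input for the next step.
        sequence.append(next_word)
    return sequence


print(" ".join(generate(["I", "am"])))
```

The key property is that each generated word is fed back in as input, so later predictions depend on earlier outputs.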

Encoder-decoder LLMs (e.g., T5) - This variant uses both the encoder and decoder parts, making such a model quite large. It is used for tasks like language translation.

While encoder-decoder models generalize both encoder-only and decoder-only models, it’s better to use a smaller model with fewer parameters if the task allows it. Encoder-only models are good for understanding tasks, decoder-only for generation tasks, and encoder-decoder for tasks where both inputs and outputs can be large sequences.

3.2 Training LLMs

LLMs typically follow the paradigm of pretraining and transfer learning.

Pretraining - via self-supervised learning on a large textual corpus such as Wikipedia or GitHub. The resulting model is called a pre-trained language model (PLM), and it can be adapted to a wide variety of downstream tasks. This is the stage that takes a huge amount of training time and compute resources, owing to the size of the model and of the training data.

Transfer learning - adapting the model to a specific task. Since the PLM has already acquired a lot of linguistic and factual knowledge, this step needs far less data and compute than pretraining. This can be done via:

Fine-tuning - The parameters of the PLM are adjusted by training with additional data relevant to the application. Fine-tuning can be of three types:

Unsupervised: Suppose one is building a programming co-pilot using PLMs. The standard PLMs are usually pre-trained on internet text such as Wikipedia. We can now fine-tune them on code text, again using self-supervised learning.

Supervised: PLMs are pre-trained for next or masked word prediction. If we want to use them for, let’s say, document sentiment analysis, we need to replace the output layer with a new one and train it with input-output pairs of texts and the associated sentiments.

Reinforcement Learning from Human Feedback (RLHF): This approach, mainly used by text generation models, consists of repeated execution of the following:

- The model is given a prompt and it generates multiple plausible answers.

- The different answers are ranked by a human from best to worst.

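- The rankings are turned into a training signal (commonly via a reward model fitted to the human preferences), which is then used to update the model’s parameters.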
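One common way to turn human rankings into a training signal is sketched below; it is an assumption about a typical setup, not a method this post prescribes. A ranking is expanded into (preferred, rejected) pairs, and a reward model is penalized whenever it scores the rejected answer above the preferred one. The function names and the numeric rewards are hypothetical.

```python
import math
from itertools import combinations


def ranking_to_pairs(ranked_answers):
    """Turn a best-to-worst ranking into (preferred, rejected) pairs."""
    # combinations() keeps the input order, so the better answer comes first.
    return list(combinations(ranked_answers, 2))


def pairwise_loss(reward_preferred, reward_rejected):
    """-log(sigmoid(r_preferred - r_rejected)), small when the order is respected."""
    diff = reward_preferred - reward_rejected
    return math.log(1.0 + math.exp(-diff))


ranking = ["answer A", "answer B", "answer C"]  # ranked best to worst by a human
print(ranking_to_pairs(ranking))

# Hypothetical reward-model scores for one pair.
print(pairwise_loss(2.0, 0.0))  # reward model agrees with the human
print(pairwise_loss(0.0, 2.0))  # reward model disagrees, so the loss is larger
```

Minimizing this loss over many ranked prompts yields a reward model whose scores can then guide updates to the text generation model.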

Prompt engineering - Until recently, fine-tuning was the only paradigm for transfer learning. Now, more powerful PLMs like GPT-3 can adapt to a new task from a prompt alone, with no explicit training: either a task description only (zero-shot learning) or a task description plus a handful of examples (few-shot learning).
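The difference between zero-shot and few-shot prompting comes down to what text is placed in the prompt. The sketch below builds both kinds of prompt as plain strings; the `Input:`/`Output:` layout and the function names are assumptions for illustration, and no particular model or API is implied.

```python
def zero_shot_prompt(task_description, query):
    """Prompt containing only the task description and the new input."""
    return f"{task_description}\n\nInput: {query}\nOutput:"


def few_shot_prompt(task_description, examples, query):
    """Prompt that also includes a handful of worked (input, output) examples."""
    lines = [task_description, ""]
    for example_input, example_output in examples:
        lines.append(f"Input: {example_input}")
        lines.append(f"Output: {example_output}")
        lines.append("")
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


task = "Classify the sentiment of the review as positive or negative."
examples = [
    ("The food was wonderful.", "positive"),
    ("I will never come back.", "negative"),
]

print(zero_shot_prompt(task, "The service was slow."))
print()
print(few_shot_prompt(task, examples, "The service was slow."))
```

In both cases the model's parameters stay fixed; only the text it is conditioned on changes.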

4. Challenges and Research Directions

4.1 Multi-modality and Environment Interaction

A lot of research is currently being done on training foundation models using data from different modalities, such as video and audio. By augmenting learning with multiple kinds of sensory data and knowledge, we provide stronger learning signals and increase learning speed.

One can also situate the foundation model in an environment where it can interact with other agents and objects; such models are called embodied foundation models. This can help the model learn cause and effect like humans by means of physically interacting with surroundings.

4.2 Understanding and Intelligence

The impressive performance of LLMs on a wide variety of tasks, even on ones they were not trained for, has given rise to debates about whether these models actually learn language in the way humans do [1] or whether they are just elaborate rewriting systems devoid of meaning.

The skeptical position, that LLMs are rewriting systems devoid of meaning, seems partly convincing because state-of-the-art LLMs remain susceptible to unpredictable and un-humanlike errors. Sometimes LLMs generate text that seems syntactically correct and natural but is factually incorrect; this is called hallucination.

On the other hand, researchers argue that given the variety and difficulty of tasks that multi-modal foundation models like GPT-4 can solve, we can confidently say they exhibit aspects of intelligence [2]. There has been some recent work on understanding in-context learning which posits that perhaps these large foundation models have smaller machine-learning models inside them that the big model can train to perform a new task [3]. Clearly, some aspects of LLM behavior do seem intelligent, but not exactly in a human way; this calls for a rethinking and expansion of the meaning of intelligence.

4.3 Alignment

Alignment refers to the process of ensuring that LLMs behave in harmony with human values and preferences. An aligned LLM is trustworthy. The characteristics needed for an LLM to be used with trust in the real world are reliability, safety, fairness, resistance to misuse, explainability, and robustness [4]. Of these, we consider only fairness here.

Fairness - Due to the huge size of the training data and of LLMs themselves, we neither clearly understand the biases encapsulated in these models nor have an estimate of their safety for use in critical applications. Homogenization is also a liability, since all derived NLP models may inherit the same harmful biases of a few foundation models. This calls for investing significant resources into curating and documenting LLM training data.

Another concern is that with more widespread use of LLMs, more content on the web is likely to be LLM-generated. When future models are trained on web data, bias is likely to be propagated and the models can become less capable - a phenomenon known as model collapse [5].

Footnotes

[1] Bender, Emily M., et al. “On the dangers of stochastic parrots: Can language models be too big? 🦜.” Proceedings of the 2021 ACM conference on fairness, accountability, and transparency. 2021.

[2] Bubeck, Sébastien, et al. “Sparks of artificial general intelligence: Early experiments with GPT-4.” arXiv preprint arXiv:2303.12712 (2023).

[3] Akyürek, Ekin, et al. “What learning algorithm is in-context learning? Investigations with linear models.” arXiv preprint arXiv:2211.15661 (2022).

[4] Liu, Yang, et al. “Trustworthy LLMs: a Survey and Guideline for Evaluating Large Language Models’ Alignment.” arXiv preprint arXiv:2308.05374 (2023).

[5] Shumailov, Ilia, et al. “The Curse of Recursion: Training on Generated Data Makes Models Forget.” arXiv preprint arXiv:2305.17493 (2023).